Introduction to Cloud Benchmarking

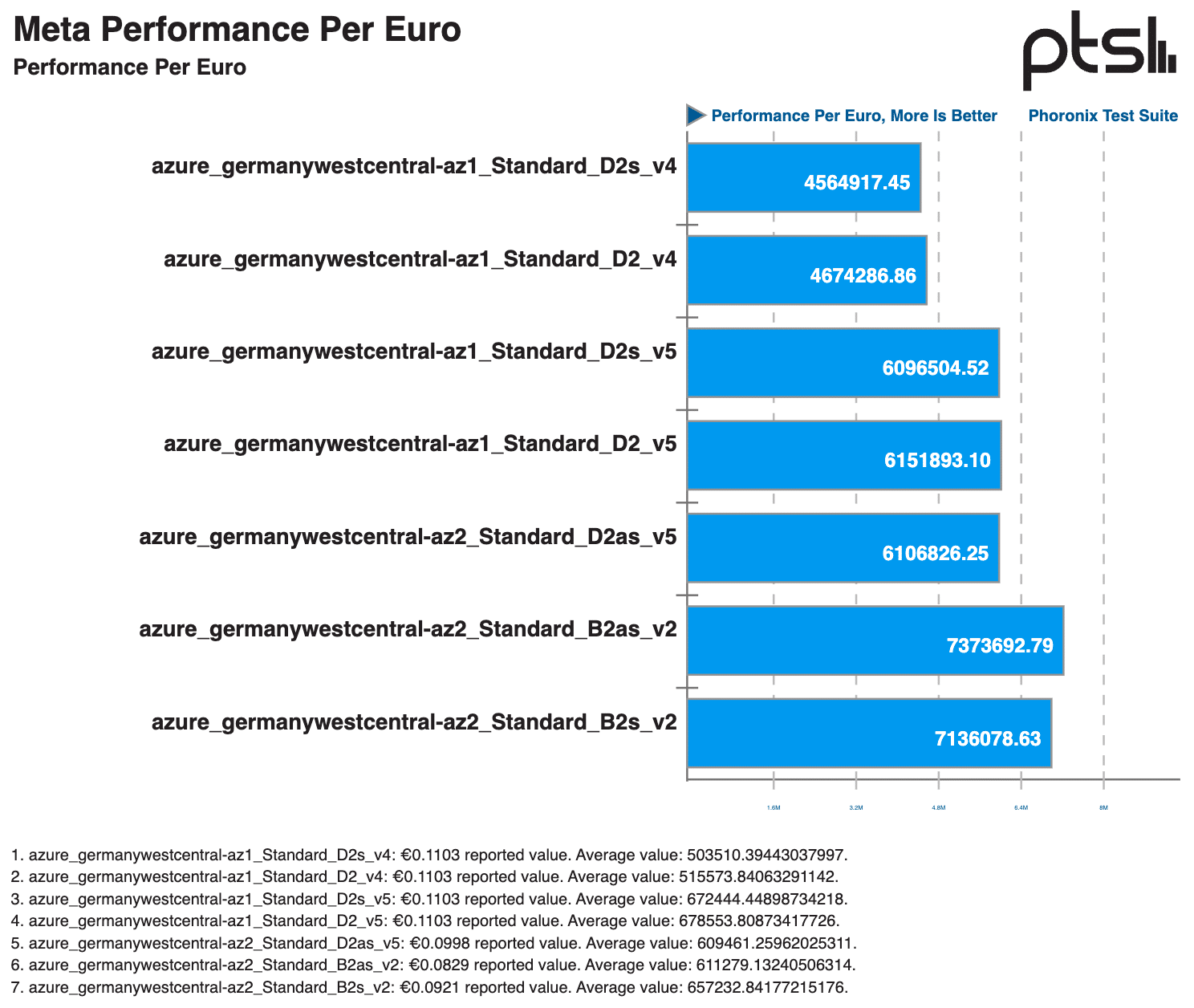

In our extensive cloud benchmark study, we compared the performance of Azure and STACKIT Cloud virtual machines (VMs) and evaluated their cost efficiency as a basis for cloud infrastructure cost optimization. To keep the comparison fair, we related the measured performance of each instance type to its price. This accounts for the differing specifications and performance capabilities of the instance types.

Performance-to-Price Adjustment

To understand why this adjustment is essential, consider the following example: a virtual machine with 2 GB RAM and 2 vCPUs could run on a powerful HPC server or on a small Raspberry Pi. While the Raspberry Pi instance might be cheaper, its performance gap to the HPC server could outweigh the cost advantage. Evaluating cost efficiency, that is, performance per unit of price, may therefore show that the instance on the HPC server delivers better value.
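The adjustment boils down to dividing a benchmark score by the instance price. The following Python sketch illustrates this for the Raspberry Pi example with hypothetical scores and hourly prices (not measurements from the study); the `Instance` class and both functions are illustrative names, not part of any tool used here.

```python
from dataclasses import dataclass


@dataclass
class Instance:
    """A VM offering with a benchmark score and an hourly price (hypothetical)."""
    name: str
    score: float           # benchmark result, higher is better
    price_per_hour: float  # e.g. EUR per hour


def performance_per_price(inst: Instance) -> float:
    """Benchmark points obtained per unit of hourly price."""
    if inst.price_per_hour <= 0:
        raise ValueError("price_per_hour must be positive")
    return inst.score / inst.price_per_hour


def rank_by_value(instances: list[Instance]) -> list[Instance]:
    """Sort instances from best to worst performance per price."""
    return sorted(instances, key=performance_per_price, reverse=True)


# Hypothetical numbers: the Raspberry Pi VM is 5x cheaper but 15x slower,
# so it loses on performance per price.
pi_vm = Instance("2 vCPU / 2 GB on Raspberry Pi", score=100.0, price_per_hour=0.01)
hpc_vm = Instance("2 vCPU / 2 GB on HPC server", score=1500.0, price_per_hour=0.05)

for inst in rank_by_value([pi_vm, hpc_vm]):
    print(f"{inst.name}: {performance_per_price(inst):,.0f} points per EUR/h")
```

For lower-is-better metrics such as runtime, the value would have to be inverted before it is used as `score`.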

Methodology of Cloud Server Benchmarking

Tool Selection

We evaluated multiple benchmark tools and prioritized standard tools whenever possible. However, compatibility issues with STACKIT Cloud required workarounds. We selected Terraform, an infrastructure-as-code tool for cloud automation, as the “glue” between the benchmark tools and the cloud provider APIs, with additional bash scripts for VM provisioning and result collection. We avoided complex infrastructure setups and considered only free, open-source benchmark tools.

Scientific Approach

Our approach was designed to be as scientific as possible within time and cost constraints. Although the number of repetitions and the runtime per benchmark were limited, we ran the benchmarks on a large set of instances to make the results more reliable. At this scale, individual outliers could be identified and re-evaluated, which limits the impact of inaccurate data points. Additionally, keeping cloud costs low was a key consideration when selecting benchmark parameters.
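The article does not specify how outliers were detected. One common, robust option is the modified z-score based on the median absolute deviation (MAD). The Python sketch below flags runs of the same instance type that deviate strongly from the median so they can be re-run; the 3.5 threshold is a conventional rule of thumb, and the run IDs and scores are hypothetical.

```python
import statistics


def flag_outliers(scores: dict[str, float], threshold: float = 3.5) -> list[str]:
    """Return the IDs of runs whose modified z-score exceeds `threshold`.

    Modified z-score: 0.6745 * (x - median) / MAD, where MAD is the median
    absolute deviation from the median. Unlike mean and standard deviation,
    median and MAD are barely affected by the outliers being searched for.
    """
    values = list(scores.values())
    median = statistics.median(values)
    mad = statistics.median(abs(v - median) for v in values)
    if mad == 0:
        # At least half of the runs equal the median; flag every run that differs.
        return [run for run, v in scores.items() if v != median]
    return [
        run for run, v in scores.items()
        if abs(0.6745 * (v - median) / mad) > threshold
    ]


# Hypothetical scores of five VMs of the same instance type.
runs = {"vm-01": 1010.0, "vm-02": 990.0, "vm-03": 1005.0, "vm-04": 995.0, "vm-05": 620.0}
print(flag_outliers(runs))  # → ['vm-05']
```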

Real-World Server Benchmarks

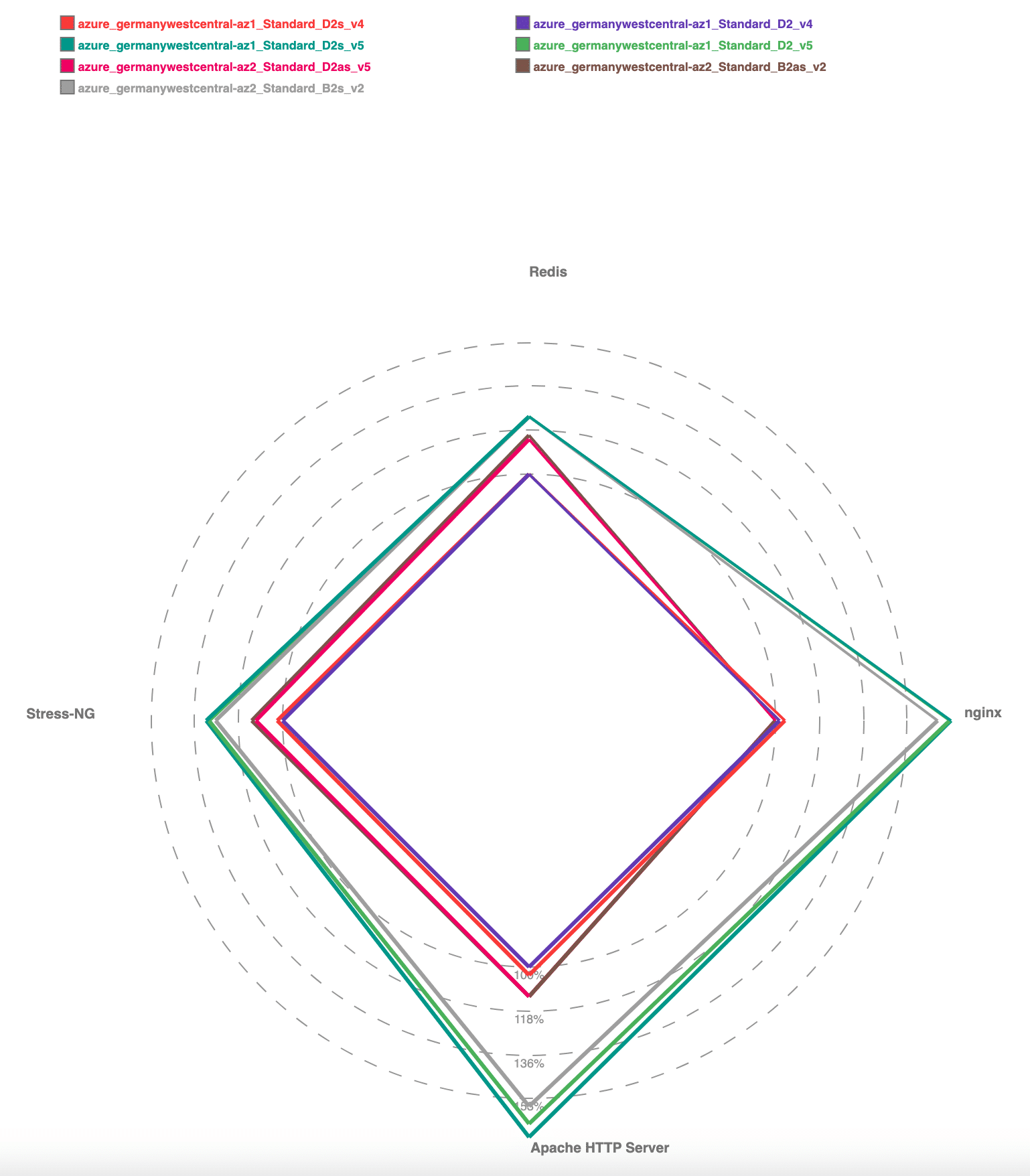

To ensure our benchmarks were representative of real-world performance, we tested commonly used server applications such as:

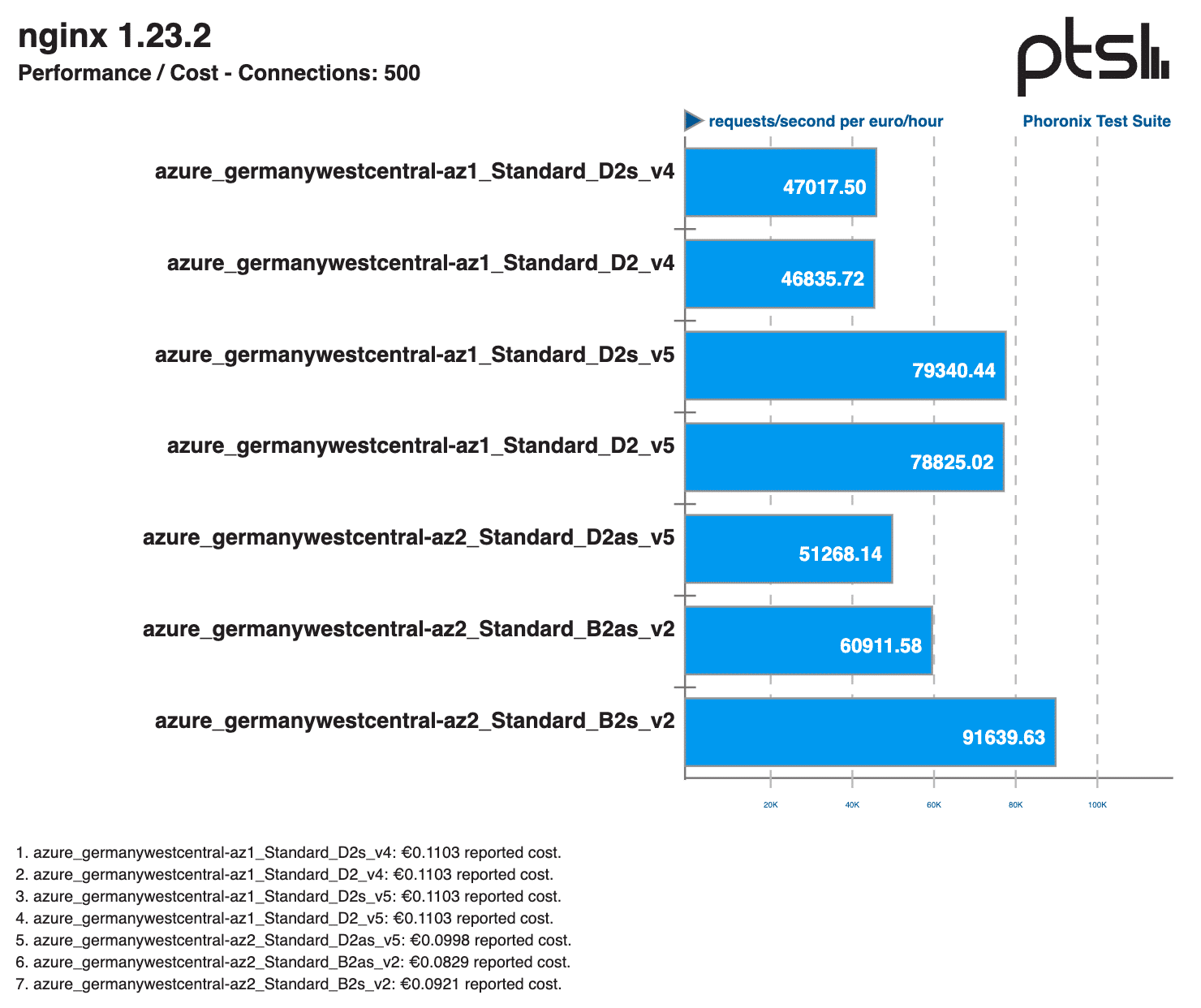

- Apache and Nginx for web server performance

- Redis for in-memory database performance

These tests helped evaluate how well the cloud instances handled real workloads, including HTTP request handling, Redis queries, and cache performance under load. By incorporating these tests, we provided practical performance insights beyond synthetic benchmarks.

Synthetic Benchmarks

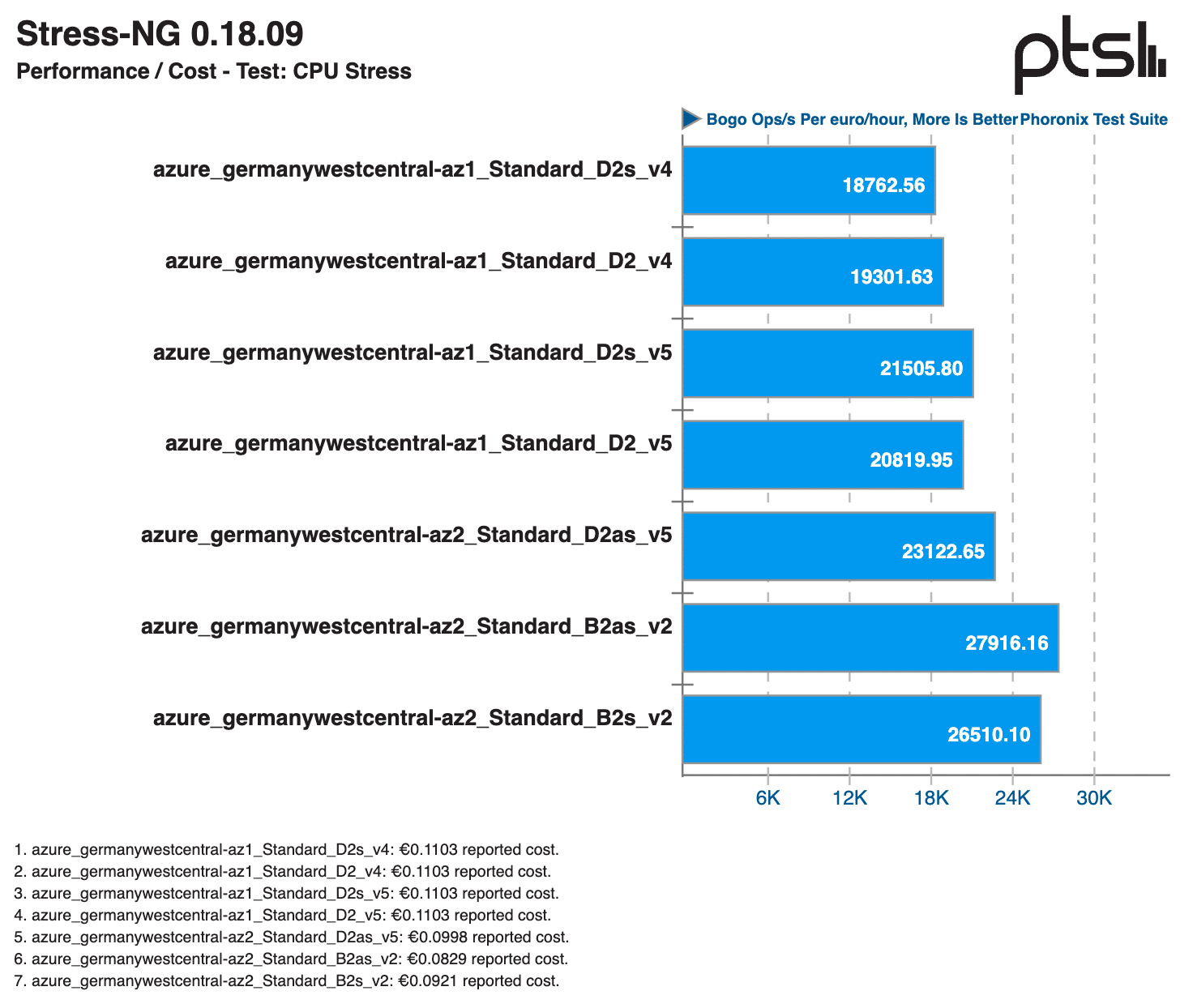

In addition to real-world workloads, we also ran synthetic benchmarks on cloud servers to measure raw system performance in a controlled environment. These included:

- CPU benchmarks

- Memory benchmarks

- Storage benchmarks

- Network benchmarks

By combining both real-world and synthetic benchmarks, we ensured a balanced evaluation that captured both theoretical performance capabilities and practical workload handling.
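The article does not state how real-world and synthetic results were combined. A common approach, shown here as an assumption, is to normalize each benchmark against a reference instance and take the geometric mean of the ratios. The benchmark names and values in this Python sketch are hypothetical.

```python
import statistics


def normalized_ratio(value: float, reference: float, higher_is_better: bool) -> float:
    """Result relative to the reference instance; > 1 means better than the reference."""
    return value / reference if higher_is_better else reference / value


def composite_score(results: dict[str, float],
                    reference: dict[str, float],
                    higher_is_better: dict[str, bool]) -> float:
    """Geometric mean of the per-benchmark ratios against the reference."""
    ratios = [
        normalized_ratio(results[name], reference[name], higher_is_better[name])
        for name in reference
    ]
    return statistics.geometric_mean(ratios)


# Hypothetical mix of real-world and synthetic benchmarks.
direction = {"nginx_requests_per_s": True, "redis_ops_per_s": True,
             "cpu_compile_seconds": False, "disk_write_mb_per_s": True}
reference = {"nginx_requests_per_s": 10_000, "redis_ops_per_s": 80_000,
             "cpu_compile_seconds": 120, "disk_write_mb_per_s": 400}
candidate = {"nginx_requests_per_s": 15_000, "redis_ops_per_s": 80_000,
             "cpu_compile_seconds": 100, "disk_write_mb_per_s": 300}

print(f"{composite_score(candidate, reference, direction):.3f}")
```

The geometric mean keeps a benchmark with large absolute numbers from dominating the result, and the ranking it produces does not depend on which instance is chosen as the reference.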

Cloud Benchmark Tools

Geekbench

Overview: Geekbench measures CPU and GPU performance, simulating real-world tasks such as encryption, photo editing, and machine learning. It provides cross-platform compatibility and normalized results for architecture comparisons.

Cloud Server Benchmarks: While Geekbench can run on servers, we found it unsuitable for cloud instances with high CPU counts, where its results did not reflect the performance of typical server workloads. Consequently, we dropped Geekbench from our benchmark suite early in the evaluation process.

Link: https://www.geekbench.com/

Yet-Another-Bench-Script (yabs.sh)

Overview: Yabs.sh automates the execution of Geekbench, fio, and iperf3 without requiring external dependencies or elevated privileges.

Cloud Server Benchmarks: We used yabs.sh to run Geekbench on test servers quickly, but its limited scope made it too narrow for a comprehensive evaluation of cloud environments.

Link: https://github.com/masonr/yet-another-bench-script

Phoronix Test Suite (PTS)

Overview: PTS is an open-source benchmarking platform that supports a wide range of tests for CPU, GPU, memory, and storage. It integrates with OpenBenchmarking.org, a public benchmark result database, and supports batch testing with automated result validation.

Cloud Server Benchmarks: Although it is not cloud-native, Phoronix Test Suite emerged as the best-fitting tool for our benchmarks. Its comprehensive, repeatable tests and self-verification of results provided valuable insights. To keep costs manageable, we limited the benchmark runtime to 1–2 hours per instance. We also modified the PTS code to fix rounding-precision issues on small VM instances, which shows how well PTS can be adapted to specific needs.

Link: https://github.com/phoronix-test-suite/phoronix-test-suite

PerfKitBenchmarker (PKB)

Overview: PerfKitBenchmarker (PKB), initially developed by Google, automates resource provisioning, benchmark execution, and data collection for cloud services.

Cloud Server Benchmarks: Despite its OpenStack integration, PKB was not the ideal choice for benchmarking STACKIT Cloud. PKB creates fresh cloud resources for each benchmark step, which drives up costs because STACKIT bills every resource for at least one hour. Additionally, integrating the Elasticsearch and InfluxDB result publishers was difficult because the relevant code was outdated.

Link: https://github.com/GoogleCloudPlatform/PerfKitBenchmarker
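The cost effect of PKB's per-step provisioning on STACKIT can be estimated with a short calculation. This Python sketch assumes the one-hour minimum billing period mentioned for STACKIT and, as a simplification, exact billing beyond that minimum; it ignores provisioning time, and the step durations and hourly price are hypothetical.

```python
def billed_hours(runtime_minutes: float, minimum_hours: float = 1.0) -> float:
    """Hours charged for one resource, given a minimum billing period.

    Assumption: usage beyond the minimum is billed exactly; real rounding
    rules may differ.
    """
    return max(runtime_minutes / 60.0, minimum_hours)


def cost_fresh_vm_per_step(step_minutes: list[float], price_per_hour: float) -> float:
    """PKB-style: a new VM is created and deleted for every benchmark step."""
    return sum(billed_hours(m) for m in step_minutes) * price_per_hour


def cost_single_vm(step_minutes: list[float], price_per_hour: float) -> float:
    """One long-lived VM runs all steps back to back."""
    return billed_hours(sum(step_minutes)) * price_per_hour


# Hypothetical run: six 10-minute benchmark steps on a VM costing 0.10 per hour.
steps = [10.0] * 6
print(f"fresh VM per step: {cost_fresh_vm_per_step(steps, 0.10):.2f}")  # six one-hour minimums
print(f"single VM:         {cost_single_vm(steps, 0.10):.2f}")          # one hour in total
```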

Terraform as “Glue”

Terraform was used to automate the creation of servers and other infrastructure components. It ran the benchmark tools through provisioners and collected the results via SSH/SCP. Because Terraform tracks every resource it manages, we could efficiently recreate instances whose benchmark runs had failed.
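Terraform's `-replace` option (Terraform v0.15.2 and later) lets a single `terraform apply` destroy and recreate selected resources while leaving the rest untouched. The Python sketch below, under assumed conventions, finds instances without a collected result file and builds such a command. The `<results_dir>/<name>.json` layout and the resource address `openstack_compute_instance_v2.bench` (declared with `for_each` keyed by instance name) are hypothetical; the article does not describe its Terraform configuration.

```python
import subprocess
import tempfile
from pathlib import Path


def missing_results(instance_names: list[str], results_dir: Path) -> list[str]:
    """Instances with no result file (assumed layout: <results_dir>/<name>.json)."""
    return [n for n in instance_names if not (results_dir / f"{n}.json").is_file()]


def replace_command(failed: list[str],
                    resource: str = "openstack_compute_instance_v2.bench") -> list[str]:
    """argv for `terraform apply` that recreates only the failed instances.

    Assumes a hypothetical resource declared with for_each keyed by instance
    name, so each VM has an address like resource["vm-02"].
    """
    cmd = ["terraform", "apply", "-auto-approve"]
    cmd += [f'-replace={resource}["{name}"]' for name in failed]
    return cmd


def recreate(cmd: list[str]) -> None:
    """Run the command without a shell, so brackets and quotes need no escaping."""
    subprocess.run(cmd, check=True)


# Demo with a temporary results directory in which vm-02 produced no output.
with tempfile.TemporaryDirectory() as tmp:
    results = Path(tmp)
    (results / "vm-01.json").write_text("{}")
    failed = missing_results(["vm-01", "vm-02"], results)
    print(replace_command(failed))
```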

Challenges and Pitfalls

SSH Connection Issues

Running benchmarks on large batches of instances led to SSH connection failures, most likely because the high volume of connections triggered firewall rules or rate limits. This problem highlighted the difficulty of managing hundreds of instances simultaneously.
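The article does not say how the failed connections were handled. A common mitigation, sketched here in Python as an assumption, is to retry each connection with exponential backoff and random jitter so that hundreds of clients do not reconnect in lockstep. The `sleep` parameter exists only so the function can be tested without waiting, and the `ssh` call in the comment is a hypothetical usage.

```python
import random
import subprocess
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def retry_with_backoff(action: Callable[[], T],
                       attempts: int = 5,
                       base_delay: float = 2.0,
                       max_delay: float = 60.0,
                       retry_on: tuple[type[BaseException], ...] = (
                           OSError, subprocess.CalledProcessError),
                       sleep: Callable[[float], None] = time.sleep) -> T:
    """Call `action` until it succeeds or `attempts` is exhausted.

    After the n-th failure, waits a random time between 0 and
    min(max_delay, base_delay * 2**(n - 1)) seconds ("full jitter").
    The last exception is re-raised if every attempt fails.
    """
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(1, attempts + 1):
        try:
            return action()
        except retry_on:
            if attempt == attempts:
                raise
            sleep(random.uniform(0, min(max_delay, base_delay * 2 ** (attempt - 1))))
    raise RuntimeError("unreachable")


# Hypothetical usage: probe a benchmark VM over SSH before copying results.
# retry_with_backoff(lambda: subprocess.run(
#     ["ssh", "-o", "ConnectTimeout=10", "bench@203.0.113.10", "true"], check=True))
```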

Queue Management

To control execution order and track errors, we used Task Spooler (tsp). This Unix utility allowed us to queue benchmark jobs, manage parallel execution, and handle errors efficiently.
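Task Spooler itself is a command-line tool; the Python sketch below only illustrates the same idea: a queue of jobs, a fixed number of parallel slots, and a record of exit codes so failed jobs can be listed and re-queued. The job names and commands are hypothetical; in practice each job might be an SSH or SCP command for one benchmark VM.

```python
import subprocess
import sys
from concurrent.futures import ThreadPoolExecutor


def run_job(argv: list[str]) -> int:
    """Run one command and return its exit code."""
    try:
        return subprocess.run(argv, capture_output=True).returncode
    except OSError:
        # The command could not be started (e.g. not found); use 127 like a shell.
        return 127


def run_queue(jobs: dict[str, list[str]], slots: int = 4) -> dict[str, int]:
    """Run all jobs with at most `slots` running at once; map job name to exit code."""
    with ThreadPoolExecutor(max_workers=slots) as pool:
        futures = {name: pool.submit(run_job, argv) for name, argv in jobs.items()}
        return {name: future.result() for name, future in futures.items()}


def failed_jobs(exit_codes: dict[str, int]) -> list[str]:
    """Names of jobs that exited with a non-zero status."""
    return [name for name, code in exit_codes.items() if code != 0]


# Hypothetical jobs: one succeeds, one fails.
jobs = {
    "bench-vm-01": [sys.executable, "-c", "print('benchmark done')"],
    "bench-vm-02": [sys.executable, "-c", "import sys; sys.exit(1)"],
}
codes = run_queue(jobs, slots=2)
print(failed_jobs(codes))  # → ['bench-vm-02']
```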

Conclusion

Our cloud server benchmark methodology combines careful tool selection, Terraform-based automation, and robust error handling to produce meaningful performance insights. By relating cloud server performance to price, we offer a nuanced understanding of cloud VM value that supports better-informed decisions in cloud operations. Despite initial challenges, Phoronix Test Suite proved to be the most adaptable and effective tool for our benchmarking needs.

If you are interested in cloud benchmarks or would like to discuss systematic cloud infrastructure decisions and cost optimization, feel free to contact our experts.